
The Future of Big Data: Data Parallelism

By NIIT Editorial

Published on 06/07/2023

In the digital age, the volume of data being created and stored is growing at an exponential pace. This has given rise to the term "Big Data", which refers to the huge volumes of structured and unstructured data generated by people, businesses and other organisations around the world. Data at this scale cannot be analysed in any practical way without efficient processing strategies.

In this context, data parallelism has emerged as an important tool for managing Big Data. This article examines why data processing matters in Big Data analytics and the role data parallelism plays in it, and argues that data parallelism is an essential component of Big Data processing because it makes the analysis of massive datasets both fast and practical.

Table of Contents:

- What is Data Parallelism?

- Why is Data Parallelism Important for Big Data Analytics?

- The Future of Big Data and Data Parallelism

- Limitations and Challenges of Data Parallelism

- Use Cases of Data Parallelism

- Conclusion

What is Data Parallelism?

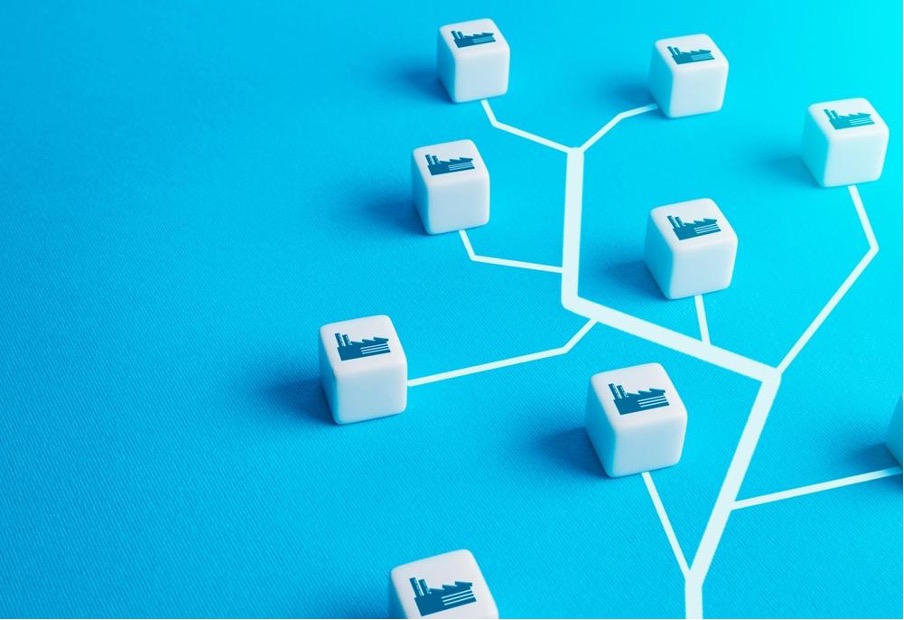

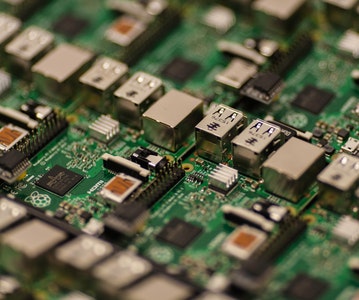

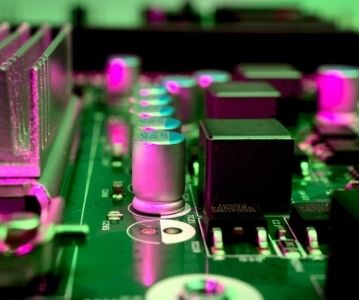

Data parallelism is a technique commonly used in Big Data processing: a massive dataset is split into smaller, more manageable pieces, and those pieces are processed concurrently on several computing nodes. Compared with processing the whole dataset sequentially on a single computer, this lets large datasets be handled far more quickly.

In practice, the chunks are spread over a network of computers, each node applies the same operation to its own share of the data, and the partial results are then combined into the final output. Because many nodes work on the same job at once, a massive volume of data can be processed in a fraction of the time a single machine would need.
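The split, process and combine steps can be sketched in a few lines of Python. This is a minimal single-machine illustration, not how any particular Big Data framework is implemented: a thread pool stands in for the cluster's nodes, and the names `split_into_chunks`, `process_chunk` and `data_parallel_count` are hypothetical. Because of Python's global interpreter lock, threads will not actually speed up CPU-bound work like this; a real system would use separate processes or machines, but the structure stays the same.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_chunks(data, n_chunks):
    """Split a list into n_chunks contiguous slices of near-equal size."""
    size, remainder = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `remainder` chunks get one extra element each.
        end = start + size + (1 if i < remainder else 0)
        chunks.append(data[start:end])
        start = end
    return chunks

def process_chunk(chunk):
    """The same operation every worker applies to its share: count even values."""
    return sum(1 for x in chunk if x % 2 == 0)

def data_parallel_count(data, n_workers=4):
    chunks = split_into_chunks(data, n_workers)
    # Each worker handles one chunk concurrently (threads stand in for nodes).
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_results = list(pool.map(process_chunk, chunks))
    # Combine the partial results into the aggregate output.
    return sum(partial_results)

records = list(range(1_000_000))
print(data_parallel_count(records))  # 500000
```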

One of the key benefits of data parallelism is scalability: as a dataset grows, more nodes can be added to the cluster to absorb the extra load. This helps Big Data processing keep pace with the ever-increasing volume of data being created.

Spreading the work over many nodes can also make processing more dependable. No single machine has to carry the whole load, and in frameworks such as Hadoop and Spark, if a node fails, only the pieces it was handling need to be re-run elsewhere rather than the entire job.

Why is Data Parallelism Important for Big Data Analytics?

For analytics, the main payoff is turnaround time. Many analytical workloads, such as aggregating logs, computing summary statistics or scoring customer records, apply the same operation to every record, so the work divides naturally into independent pieces. Processing those pieces in parallel keeps analysis times manageable as data volumes grow, lets capacity grow by adding nodes rather than replacing a single machine, and reduces the risk that one overloaded server holds up an entire analysis. As a result, organisations can act on their data while the insights are still relevant.

The Future of Big Data and Data Parallelism

Recent developments in Big Data analytics include the spread of edge computing to enable real-time data analysis and the use of cloud computing for data storage and processing.

By enabling faster processing, analysis of large volumes of unstructured data and greater scalability, data parallelism has the potential to transform the future of Big Data analytics. Spreading work across many nodes also means that a single machine failure is less likely to halt an entire job, which makes processing more dependable.

Data parallelism can be combined with other Big Data technologies to build a robust infrastructure, including distributed storage systems such as the Hadoop Distributed File System (HDFS) and cloud computing platforms such as Amazon Web Services (AWS). For real-time streaming data, engines such as Apache Spark and Apache Flink apply data parallelism to continuous data streams.

Data parallelism holds great promise for the future of Big Data analytics. As the volume and complexity of the data that companies generate continue to grow, those that want to stay competitive will need to harness it. Its use will expand as more sectors adopt Big Data analytics, and more sophisticated implementations can be expected in the years to come.

Limitations and Challenges of Data Parallelism

Despite its potential benefits, data parallelism has several limitations. One of the main barriers is cost: it depends on high-performance computing systems or clusters that provide large amounts of processing resources, and for smaller companies and startups the price may be too high to justify.

Data parallelism is also a poor fit for inherently sequential workloads, where each step depends on the result of the previous one, and for some other specialised kinds of processing.
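The difference can be seen in a small, hypothetical Python example. Work in which each record is handled independently can be split and recombined without changing the answer. A recurrence, where each step needs the previous step's result, cannot be split the same way, because a worker given only the second half of the input does not know the state produced by the first half. The functions and the modulus 1,000,003 are arbitrary choices for illustration.

```python
def per_record(x):
    # Independent work: each output depends only on its own input.
    return x * x + 1

def recurrence_step(state, x):
    # Dependent work: each step needs the previous step's result.
    return (state * state + x) % 1_000_003

def run_recurrence(values, initial=1):
    state = initial
    for x in values:
        state = recurrence_step(state, x)
    return state

values = list(range(1, 9))
half = len(values) // 2

# Independent work splits cleanly: processing the halves separately and
# concatenating gives the same answer as one sequential pass.
split_map = [per_record(x) for x in values[:half]] + [per_record(x) for x in values[half:]]
assert split_map == [per_record(x) for x in values]

# The recurrence does not: the second worker has to guess its starting state,
# because the real one is only known after the first half has been processed.
sequential = run_recurrence(values)
naive_split = run_recurrence(values[half:], initial=1)  # guessed start state
print(sequential, naive_split)
```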

Putting data parallelism into practice brings its own difficulties. When several nodes read and update shared data at the same time, keeping that data consistent and preventing conflicting updates is a major challenge, and it becomes harder still with real-time data streams. Tuning performance is another difficulty, because the behaviour of a parallel processing system depends on the size of the dataset, the number of nodes and other parameters.
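One common way to avoid conflicting updates is to stop workers from writing to shared state at all. The sketch below, a hypothetical event-counting job in Python with made-up data, contrasts a shared counter guarded by a lock with per-worker partial counts that are merged once at the end. Threads again stand in for nodes; this illustrates the pattern rather than any specific framework's mechanism.

```python
import threading
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

events = ["click", "view", "click", "buy", "view", "click"] * 1000
chunks = [events[i::4] for i in range(4)]  # 4 disjoint partitions

# Approach 1: all workers update one shared counter. Without the lock,
# concurrent read-modify-write updates could overwrite each other.
shared = Counter()
lock = threading.Lock()

def update_shared(chunk):
    for event in chunk:
        with lock:  # serialises every update: correct, but workers wait on each other
            shared[event] += 1

# Approach 2: each worker builds a private result, and the results are merged
# once at the end, so workers never write to the same object.
def count_local(chunk):
    return Counter(chunk)

with ThreadPoolExecutor(max_workers=4) as pool:
    list(pool.map(update_shared, chunks))
    partials = list(pool.map(count_local, chunks))

merged = sum(partials, Counter())
print(merged == shared)  # True
print(merged["click"])   # 3000
```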

Several techniques have been developed to address these challenges. Data partitioning divides a large dataset into manageable chunks that can be analysed in parallel, and a well-chosen partitioning scheme keeps the work evenly spread across nodes. Data replication, which keeps copies of each chunk on more than one node, protects data integrity when a node fails, while load balancing prevents any single node from becoming a bottleneck.
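Hash partitioning and replica placement can be illustrated with a simplified Python sketch. It assumes hypothetical record keys and node names, uses `zlib.crc32` as a stable hash so the same key always lands in the same partition, and places each partition on two nodes in simple round-robin order. Production systems use more sophisticated placement (for example, HDFS takes rack layout into account), so treat this as an illustration of the idea only.

```python
import zlib
from collections import defaultdict

def partition_for(key, n_partitions):
    # zlib.crc32 is stable across runs, unlike Python's salted hash() for strings.
    return zlib.crc32(key.encode("utf-8")) % n_partitions

def place_replicas(n_partitions, nodes, replication_factor=2):
    # Assign each partition to `replication_factor` distinct nodes (requires
    # replication_factor <= len(nodes)), rotating the starting node so that
    # partitions are spread evenly across the cluster.
    return {
        p: [nodes[(p + r) % len(nodes)] for r in range(replication_factor)]
        for p in range(n_partitions)
    }

# Hypothetical record keys and node names.
records = [f"user-{i}" for i in range(10_000)]
nodes = ["node-a", "node-b", "node-c", "node-d"]
n_partitions = 8

partitions = defaultdict(list)
for key in records:
    partitions[partition_for(key, n_partitions)].append(key)

placement = place_replicas(n_partitions, nodes)
for p in range(n_partitions):
    print(f"partition {p}: {len(partitions[p])} records on {placement[p]}")
```

If one node fails, every partition it held still has a copy on another node.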

Finally, advances in hardware and cloud computing mean that organisations can now adopt data parallelism with fewer resources and at a lower cost.

Use Cases of Data Parallelism

Data parallelism has several practical applications, including the following:

1. Google's MapReduce

MapReduce is a programming model, together with Google's implementation of it, for large-scale distributed computing. Massive input datasets are divided into manageable pieces that map tasks process in parallel across the many processors of a cluster; the intermediate results are then grouped by key and combined by reduce tasks.
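The classic way to illustrate the MapReduce model is a word count. The following in-memory Python sketch imitates the map, shuffle and reduce phases on a single machine. It is not Google's implementation or Hadoop's API, and the input strings are made up. In a real deployment, each split's map step would run on a different node and the framework would perform the shuffle over the network.

```python
from collections import defaultdict
from itertools import chain

def map_phase(split):
    # Map: emit (word, 1) for every word in one input split.
    return [(word.lower(), 1) for word in split.split()]

def shuffle(mapped_pairs):
    # Shuffle: group all intermediate values by key.
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    # Reduce: combine all values for one key.
    return key, sum(values)

splits = ["big data big clusters", "data parallelism splits data", "big results"]
mapped = [map_phase(s) for s in splits]  # each split could run on a different node
grouped = shuffle(chain.from_iterable(mapped))
counts = dict(reduce_phase(k, v) for k, v in grouped.items())
print(counts["big"], counts["data"])  # 3 3
```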

2. Apache Hadoop

This is a free and open-source software framework for handling massive datasets using MapReduce and other distributed computing strategies. Large datasets may be stored and processed in parallel over clusters of inexpensive hardware.
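To see how a single large file turns into parallel work in Hadoop: HDFS stores files as fixed-size blocks (128 MB by default in Hadoop 2 and later, set by `dfs.blocksize`), and MapReduce typically launches about one map task per input split, which by default lines up with one block. The estimate below assumes those defaults and a hypothetical 10 GB file, and it ignores the small tolerance Hadoop allows for a slightly oversized final split, so it is a rough guide rather than an exact count.

```python
import math

BLOCK_SIZE_MB = 128  # assumed HDFS default block size (Hadoop 2.x and later)

def estimated_map_tasks(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    # Simplified: roughly one input split, and so one map task, per block.
    return math.ceil(file_size_mb / block_size_mb)

print(estimated_map_tasks(10 * 1024))  # 80
```

Those 80 map tasks can run concurrently across the cluster, limited only by the number of available task slots.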

3. Netflix's Genie

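Genie is Netflix's open-source job orchestration service. Rather than processing data itself, it provides a central API through which engineers submit and manage Big Data jobs, such as Hadoop, Hive and Spark jobs, on Netflix's clusters. Those engines then apply data parallelism to process and analyse massive datasets quickly, supporting timely insights and better user experiences.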

Benefits Gained by Implementing Data Parallelism Include:

- Improved Performance and Speed: Dividing huge datasets into smaller pieces that are processed simultaneously reduces processing times and improves overall performance.

- Scalability: Distributing processing jobs over numerous nodes in a cluster, as is feasible with data parallelism, enables the processing of considerably bigger datasets than would be possible on a single system.

- Cost Savings: By building on open-source software frameworks and commodity hardware, companies can avoid spending on expensive proprietary hardware and software licences.
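The performance benefit has a ceiling worth knowing about. Amdahl's law, a standard result that the article itself does not discuss, says that if a fraction p of a job can be parallelised across N workers, the best possible speedup is 1 / ((1 - p) + p / N). The Python sketch below assumes a hypothetical job that is 95% parallelisable. Even with unlimited nodes its speedup cannot exceed 20 times, because the serial 5% remains, which is also why inherently sequential workloads gain little from extra nodes.

```python
def amdahl_speedup(parallel_fraction, n_workers):
    """Upper bound on speedup when only `parallel_fraction` of the work can be split."""
    serial_fraction = 1 - parallel_fraction
    return 1 / (serial_fraction + parallel_fraction / n_workers)

# Hypothetical job: 95% of the work parallelises, 5% must run serially.
for n in (1, 8, 64, 1024):
    print(n, round(amdahl_speedup(0.95, n), 2))
```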

Examples of firms whose big data analytics procedures benefited from data parallelism include:

- Facebook: The social media giant uses Hadoop to process and analyse massive volumes of data, which helps improve user experiences and generate more relevant suggestions.

- Uber: Hadoop and data parallelism are used by the ride-hailing firm to process and analyse data from its ride-sharing service, resulting in greater efficiency and more informed decisions.

- LinkedIn: Hadoop and data parallelism are put to use on the professional networking site to process and analyse big datasets for deeper insights into user behaviour and more precise suggestions.

Conclusion

In conclusion, data parallelism has significantly changed the landscape of Big Data analytics by easing the bottlenecks of conventional, single-machine data processing. It enables faster and more efficient processing, which helps organisations make better decisions based on the analysis of massive datasets. Its importance will only grow as the volume of available data continues to expand exponentially.

Using data parallelism in your Big Data analytics operations is crucial if you want to stay ahead of the competition. A data science course is one way to learn data parallelism and other cutting-edge Big Data technologies. Don't put it off any longer: sign up for a data science course today and start unlocking your data's true potential.
