
Understanding Audio for Web Games

By NIIT Editorial

Published on 30/07/2021

Reading time: 8 minutes

Web games, commonly known as browser games, are games played over the web, directly in the web browser. Older web games frequently needed a plugin such as Adobe Flash, which browsers no longer support. Today they are generally created with HTML5 or with engines such as Unity that can export games to the web.

Sound effects are one of the main components of web games. They give players feedback about the game's surroundings, draw them into the virtual world, and make the experience feel like an adventure. Together, these qualities make for a successful gaming experience. Sound adds warmth and familiarity to what is, underneath, lines of code and pixels of coloured light: even with hyper-realistic 3D graphics, the player is only looking at pixels, and sound is what makes the experience feel “real”. However, these sound effects are not recordings of "real" weapon fire or punches; they are built from recordings of many different sources. To learn more about web games, check out the NIIT game developer course.

The purposes of sound effects include:

- Setting the mood: From simple button clicks to ambient tracks, sound effects help set the right mood for a game.

- Adding realism: Sound effects for weapons, aircraft, and vehicles are specially designed to make the game world feel real to players.

- Enhancing entertainment value: Nothing is as satisfying as hearing earth-shattering explosions, gunshots, or car crashes in direct response to your actions.

- Establishing brand identity: Together, high-quality visuals and distinctive sound effects create an incredible gaming experience and give each game a fresh and unique identity.

Game Audio on Web

To create satisfying game audio, designers need to adapt the sound to whatever unpredictable game state players find themselves in. A game can have many sounds playing at once, and all of them need to sound good together and be produced without introducing performance penalties. For simple games, the <audio> tag may be sufficient. Online courses in game design can give you more insight into this.

- Background Music

Many games have background music playing continuously. Rather than repeating one short, predictable loop, it helps to have several mixes of the music that gradually cross-fade into each other depending on the context of the game. For instance, a game about epic battles might have several mixes ranging in emotional intensity from atmospheric to reflective to intense. Music synthesis software can export several mixes of the same length, each using a different selection of tracks. That way, the mixes stay internally consistent, and the cross-fade from one track to another avoids jarring transitions.

Then, with the Web Audio API, we can load all of the samples, for example with a helper such as the BufferLoader class, which fetches them via XHR. Loading sounds takes time, so all the assets used in a level should be loaded when the page loads or at the start of the level.
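BufferLoader is a small helper class from older Web Audio tutorials, not part of the API itself. In current browsers the same job can be done with `fetch` and the promise-based `decodeAudioData`. The sketch below assumes an `AudioContext` already exists; the function name `loadBuffers` is a hypothetical choice for this illustration.

```typescript
// Fetch and decode every sample up front, before the level starts.
// Returns the decoded buffers in the same order as `urls`.
async function loadBuffers(
  ctx: BaseAudioContext,
  urls: string[],
): Promise<AudioBuffer[]> {
  return Promise.all(
    urls.map(async (url) => {
      const response = await fetch(url);
      if (!response.ok) {
        throw new Error(`Failed to load ${url}: HTTP ${response.status}`);
      }
      const data = await response.arrayBuffer();
      // In current browsers decodeAudioData returns a Promise.
      return ctx.decodeAudioData(data);
    }),
  );
}
```

Usage might look like `const buffers = await loadBuffers(ctx, ["music/atmospheric.ogg", "music/intense.ogg"]);`, where the file names are placeholders. `Promise.all` loads the files in parallel and rejects if any one of them fails.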

The next task is to create a source node for each sample and a gain node for each source, and then connect the graph.
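A minimal sketch of that graph, assuming the mixes have already been decoded into `AudioBuffer`s of equal length. `buildMixGraph` and `MixChannel` are hypothetical names. Every source is started at the same scheduled time, which is what keeps the loops aligned.

```typescript
// One looping source per mix, each with its own gain node.
interface MixChannel {
  source: AudioBufferSourceNode;
  gain: GainNode;
}

function buildMixGraph(ctx: AudioContext, buffers: AudioBuffer[]): MixChannel[] {
  const channels = buffers.map((buffer) => {
    const source = ctx.createBufferSource();
    source.buffer = buffer;
    source.loop = true; // equal-length mixes loop in step with each other
    const gain = ctx.createGain();
    gain.gain.value = 0; // start silent; the game raises gains as needed
    source.connect(gain);
    gain.connect(ctx.destination); // all gains are summed at the output
    return { source, gain };
  });
  // Schedule every source at the same moment so they stay in sync.
  const startAt = ctx.currentTime + 0.1;
  for (const { source } of channels) {
    source.start(startAt);
  }
  return channels;
}
```

Browsers usually keep a new `AudioContext` in the "suspended" state until the user interacts with the page, so a game would typically call `ctx.resume()` from a click or key handler before expecting to hear anything.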

Once this is done, we can play back all of these sources simultaneously on a loop, and because they all have the same length, the Web Audio API keeps them aligned. As the character moves closer to or farther from the final battle, the game can adjust the gain of each node in the chain using a gain-amount algorithm.

In this approach, only two neighbouring sources are audible at any moment, and the game cross-fades between them using equal-power curves.
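The equal-power crossfade described above can be sketched as follows. This is an illustration rather than the article's original code: `intensity` is an assumed value from 0 to 1 (for example, how close the character is to the final battle), and the function names are hypothetical. The second gain, cos((1 − x)·π/2), equals sin(x·π/2), so the squares of the two audible gains always sum to 1 and the overall loudness stays steady during the fade.

```typescript
// Equal-power crossfade gains for `count` equal-length mixes.
// intensity = 0 plays only the first mix, intensity = 1 only the last;
// in between, exactly two neighbouring mixes are audible.
function crossfadeGains(intensity: number, count: number): number[] {
  if (count <= 0) return [];
  if (count === 1) return [1];
  const clamped = Math.min(Math.max(intensity, 0), 1);
  // Position along the chain (0-based), e.g. 1.5 = halfway between mix 1 and mix 2.
  const position = clamped * (count - 1);
  // Left-hand mix of the audible pair; never the last mix.
  const left = Math.min(Math.floor(position), count - 2);
  const x = position - left; // 0..1 between the two neighbours
  const gains = new Array<number>(count).fill(0);
  gains[left] = Math.cos(x * 0.5 * Math.PI);
  gains[left + 1] = Math.cos((1 - x) * 0.5 * Math.PI);
  return gains;
}

// Apply the gains to the mix gain nodes, gliding rather than jumping
// so that the change does not produce audible clicks.
function applyCrossfade(
  ctx: BaseAudioContext,
  nodes: GainNode[],
  intensity: number,
): void {
  const gains = crossfadeGains(intensity, nodes.length);
  nodes.forEach((node, i) => {
    node.gain.setTargetAtTime(gains[i], ctx.currentTime, 0.05);
  });
}
```

For example, `crossfadeGains(0.25, 3)` returns roughly `[0.707, 0.707, 0]`: a quarter of the way along three mixes is exactly halfway between the first two.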

- The missing link: Audio tag to Web Audio

Many game developers use the <audio> tag for their background music because it is well suited to streaming content. Content from an <audio> tag can also easily be brought into a Web Audio context.

This technique is useful because the <audio> tag can stream content, so the player hears the background music immediately instead of waiting for the whole file to download. By routing the stream into the Web Audio API, we can then process or analyse it. For example, we can apply a low-pass filter to the music played through the <audio> tag.
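A minimal sketch of routing an `<audio>` element through a low-pass filter, using `createMediaElementSource` and a `BiquadFilterNode`. The function name and the 440 Hz default cutoff are illustrative assumptions. Note that `createMediaElementSource` can be called only once per element, and once it is, the element's sound is heard only through the graph.

```typescript
// Route a streaming <audio> element through a low-pass filter.
function addLowPassToAudioElement(
  ctx: AudioContext,
  audioEl: HTMLAudioElement,
  cutoffHz = 440,
): BiquadFilterNode {
  // From here on, the element's output flows into the Web Audio graph
  // instead of going straight to the speakers.
  const source = ctx.createMediaElementSource(audioEl);
  const filter = ctx.createBiquadFilter();
  filter.type = "lowpass";
  filter.frequency.value = cutoffHz; // frequencies above this are attenuated
  source.connect(filter);
  filter.connect(ctx.destination);
  return filter;
}
```

The returned filter can be adjusted later, for instance `filter.frequency.setTargetAtTime(20000, ctx.currentTime, 0.5)` to open the filter back up gradually when the muffled effect is no longer wanted.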

- Sound effects

Games play sound effects in response to user input or changes in the game state, and hearing exactly the same sample over and over quickly becomes irritating. It is therefore useful to have a pool of similar but slightly different sounds to play during the game. The variations can range from subtle differences between footstep samples to drastic ones. Another important point is that many sound effects may be playing at the same time. Many game-making courses can give you more information on these topics.

The following example builds a machine-gun burst from multiple individual bullet samples by playing several overlapping sound effects.

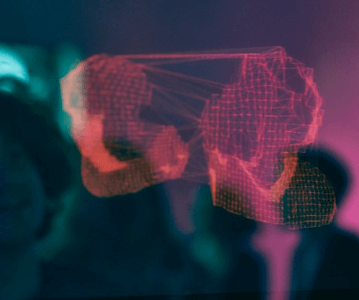
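A sketch of the idea behind the machine-gun example, written independently rather than transcribed from the image: each round picks a bullet sample at random (never the one just played), adds a small random change in pitch and volume, and is scheduled at a fixed interval so that consecutive rounds can overlap. The 0.1-second interval, the variation ranges, and the helper names are assumptions.

```typescript
// Pick a sample index in [0, count), never repeating `previous` (if valid).
// `rand` defaults to Math.random and must return a value in [0, 1).
function pickVariant(
  count: number,
  previous: number,
  rand: () => number = Math.random,
): number {
  if (count <= 1) return 0;
  if (previous < 0 || previous >= count) {
    return Math.floor(rand() * count);
  }
  // Choose among the other count - 1 indices, then step over `previous`.
  let i = Math.floor(rand() * (count - 1));
  if (i >= previous) i += 1;
  return i;
}

// Schedule `rounds` bullet sounds, `interval` seconds apart.
function playBurst(
  ctx: AudioContext,
  bullets: AudioBuffer[],
  rounds: number,
  interval = 0.1,
): void {
  if (bullets.length === 0) return;
  const start = ctx.currentTime;
  let previous = -1;
  for (let r = 0; r < rounds; r++) {
    previous = pickVariant(bullets.length, previous);
    const source = ctx.createBufferSource();
    source.buffer = bullets[previous];
    // Up to +/-5% pitch change so repeated shots do not sound identical.
    source.playbackRate.value = 0.95 + Math.random() * 0.1;
    const gain = ctx.createGain();
    gain.gain.value = 0.8 + Math.random() * 0.2; // slight volume variation
    source.connect(gain);
    gain.connect(ctx.destination);
    source.start(start + r * interval);
  }
}
```

Passing `rand` as a parameter keeps the selection logic deterministic for testing; in the game it simply uses `Math.random`.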

- 3D positional sound

For games set in a world with some geometry, whether 2D or 3D, stereo positional audio can greatly increase immersion. The Web Audio API has built-in, hardware-accelerated positional audio features that are quite simple to use.

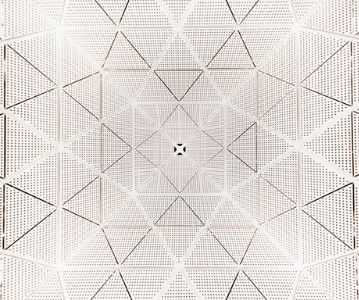

In this example, we can change the angle of the source simply by scrolling while the mouse is over the canvas.

In the image above, a listener (person icon) sits in the centre of the canvas, and the mouse controls the position of the source (speaker icon). It is a simple example of using AudioPannerNode (called PannerNode in the current Web Audio API specification). For hands-on experience, you can check out the NIIT game development courses.

The main idea of the sample is to respond to mouse movement by updating the position of the audio source.
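A sketch of the mouse-to-position mapping, assuming the listener stays at the origin with the Web Audio API's default orientation (facing the negative z axis) and that one pixel corresponds to 0.05 distance units. The scale and all function names are assumptions for illustration. It sets the `PannerNode` position parameters (`positionX`, `positionY`, `positionZ`), which replace the older, deprecated `setPosition` method.

```typescript
// Map a mouse position on the canvas to a source position around a listener
// at the canvas centre. `scale` converts pixels to Web Audio distance units.
function mouseToPosition(
  mouseX: number,
  mouseY: number,
  width: number,
  height: number,
  scale = 0.05,
): { x: number; y: number; z: number } {
  return {
    x: (mouseX - width / 2) * scale,
    y: 0,
    // The top of the canvas maps to negative z, which is in front of a
    // listener using the default forward direction (0, 0, -1).
    z: (mouseY - height / 2) * scale,
  };
}

// Play a looping buffer through an HRTF panner and return the panner.
function createPannedSource(ctx: AudioContext, buffer: AudioBuffer): PannerNode {
  const source = ctx.createBufferSource();
  source.buffer = buffer;
  source.loop = true;
  const panner = ctx.createPanner();
  panner.panningModel = "HRTF";
  source.connect(panner);
  panner.connect(ctx.destination);
  source.start();
  return panner;
}

// Move the panner whenever the mouse moves over the canvas.
function followMouse(canvas: HTMLCanvasElement, panner: PannerNode): void {
  canvas.addEventListener("mousemove", (event) => {
    const rect = canvas.getBoundingClientRect();
    const p = mouseToPosition(
      event.clientX - rect.left,
      event.clientY - rect.top,
      rect.width,
      rect.height,
    );
    panner.positionX.value = p.x;
    panner.positionY.value = p.y;
    panner.positionZ.value = p.z;
  });
}
```

Keeping the coordinate mapping in a separate pure function makes it easy to change the scale, or to map the canvas onto the x/y plane instead, without touching the audio code.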

- Room effects and filters

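In reality, how a sound is perceived depends heavily on the room in which it is heard: the same creaky door sounds very different in a basement than in a large open hall. Games with high production value want to imitate these effects, but recording a separate set of samples for each environment is extremely expensive and leads to even more assets and a larger amount of game data. Instead, the acoustic character of a room can be captured as an impulse response and applied to the original samples.

Many sites host pre-recorded impulse response files (stored as audio) that can be used for this purpose.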

The Web Audio API provides a very easy way to apply these impulse responses to our sounds using the ConvolverNode.
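A minimal sketch of a room effect with `ConvolverNode`, assuming the impulse response has already been decoded into an `AudioBuffer` with `decodeAudioData`, just like any other sample. It keeps a dry (unprocessed) path alongside the convolved (wet) path so the amount of reverb can be tuned; the 0.4 wet level and the function name are assumptions.

```typescript
// Send `input` through a convolver loaded with a room's impulse response,
// mixing the reverberant (wet) signal with the original (dry) signal.
function addRoomEffect(
  ctx: AudioContext,
  input: AudioNode,
  impulse: AudioBuffer,
  wetLevel = 0.4,
): { convolver: ConvolverNode; dry: GainNode; wet: GainNode } {
  const convolver = ctx.createConvolver();
  convolver.buffer = impulse;
  const dry = ctx.createGain();
  const wet = ctx.createGain();
  dry.gain.value = 1 - wetLevel;
  wet.gain.value = wetLevel;
  // Dry path: input -> dry -> output.
  input.connect(dry);
  dry.connect(ctx.destination);
  // Wet path: input -> convolver -> wet -> output.
  input.connect(convolver);
  convolver.connect(wet);
  wet.connect(ctx.destination);
  return { convolver, dry, wet };
}
```

Because the wet and dry gains are returned, the game can shift the balance smoothly (for example with `setTargetAtTime`) as the player moves from a small room into a larger space.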

Endnote

The Web Audio API is a high-level JavaScript API for processing and synthesising audio in web applications. Its main goal is to include the capabilities found in modern game audio engines, along with some of the mixing, processing, and filtering tasks found in modern desktop audio production applications. Sound effects have a remarkable influence on the overall gaming experience. A game may get by without music or dialogue, but without sound effects it would be very disappointing.
