This article is about Machine Learning

Getting to Know Deep Learning? Essential Things You Should Know!

By NIIT Editorial

Published on 05/05/2021

8 minutes

This era has witnessed dramatic developments in technology. Automation has replaced many manual tasks, and technologies such as voice recognition, artificial intelligence, and virtual reality have reshaped the technology landscape. Machine Learning in particular has driven rapid growth in the programming tools and techniques available to data scientists. This theme is also reflected in NIIT’s flagship data science career programs, which are popular with future-ready learners:

- Advanced Post Graduate Program in Data Science and Machine Learning (Full Time)

- Advanced Post Graduate Program in Data Science and Machine Learning (Part time)

- Data Science Foundation Program (Full Time)

- Data Science Foundation Program (Part Time)

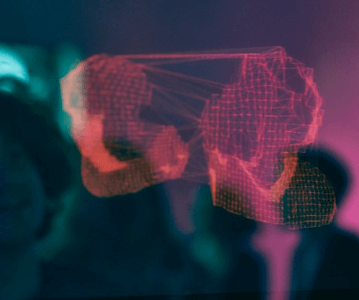

Machine Learning has several subfields under its umbrella, and Deep Learning is one of them. Deep Learning deals with algorithms called artificial neural networks, which are inspired by the structure and function of the brain.

This branch of machine learning can be a little challenging for novices. Most of us have encountered applications of neural networks many times without connecting them to deep learning. Concepts such as hidden layers, backpropagation, and convolutional neural networks can be especially hard to follow if you dive straight in. Don’t worry: in this blog, we will discuss deep learning from the basics so that a newcomer can follow along.

To make it easier to learn, the groundwork for deep learning can be divided into the following segments:

- Getting your system ready

- Python programming

- Linear Algebra and Calculus

- Probability and Statistics

- Key Machine Learning Concepts

Getting your system ready

Tools are the first thing we need when picking up a new skill. Basic knowledge of your operating system (Windows, Linux, or Mac) will come in handy, and knowing a little about computer hardware is also a plus.

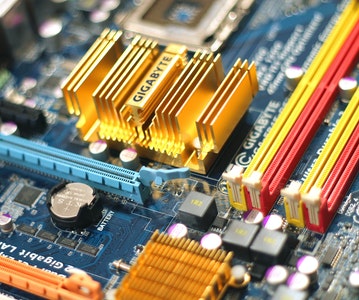

Graphics Processing Unit (GPU): A GPU speeds up deep learning work, especially with image and video data. A deep learning project can be built on a laptop or personal computer without a GPU, but training will usually be much slower than on a machine that has one. Some of the advantages of using a GPU are:

- GPU enables parallel processing

- The combination of CPU and GPU saves time

- GPU can handle complex tasks

Worried that investing in a GPU costs too much? You can use cloud computing resources instead. Several platforms provide GPU access for free or at a low price, and some come with basic practice datasets and tutorials preloaded. Paperspace, Google Colab, Gradient, and Kaggle Kernels are some of them. By contrast, services like Amazon Web Services EC2 give you full-fledged servers that need step-by-step installation and customization.

Tensor Processing Units (TPUs): Deep learning has also led Google to develop its own kind of processing unit, built specifically for neural networks and deep learning tasks. These are called TPUs.

TPUs are co-processors that work alongside the CPU. For some deep learning workloads they can be faster and more cost-effective than GPUs. Google Colab offers free access to cloud TPUs.
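Whichever machine you end up on, it helps to check whether a GPU is actually visible to your code. The snippet below is a minimal sketch that assumes PyTorch as the framework (an assumption made for illustration; any framework offers a similar check). The helper name `pick_device` is hypothetical, and the function simply falls back to the CPU when PyTorch is not installed or no GPU is found.

```python
def pick_device():
    """Return "cuda" if PyTorch is installed and can see a GPU, else "cpu"."""
    try:
        import torch  # optional dependency, assumed here for illustration
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


device = pick_device()
print("Running on:", device)
```

On a free cloud notebook, the same check tells you whether the GPU runtime has been switched on.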

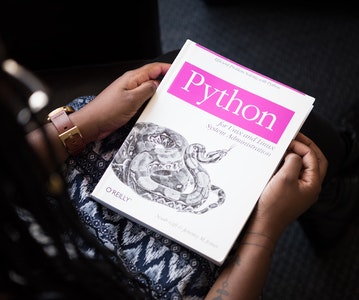

Python Programming:

Once you have the basics in place, you need to learn the main programming tool: Python, which is widely used across industries for deep learning. For additional functionality, you turn to Python libraries. A library is a collection of ready-made tools, such as functions, that you can call from your own programs. You need not be a hardcore coder for Deep Learning; knowing the basics is enough to start. Anaconda is a Python distribution that helps you manage your Python versions and libraries.
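To make the idea of libraries and functions concrete, here is a small sketch using two modules from Python’s standard library. The modules and the sample marks are chosen only for illustration; deep learning libraries are imported and called in the same way.

```python
import math        # library of mathematical functions
import statistics  # library of basic statistical functions

marks = [35, 42, 58, 61, 77]  # made-up sample data

mean_mark = statistics.mean(marks)  # average of the marks
spread = statistics.pstdev(marks)   # population standard deviation
root = math.sqrt(16)                # square root of 16

print(mean_mark, round(spread, 2), root)
```

Each call such as `statistics.mean(...)` is a function that someone else has already written and tested, which is exactly why libraries save so much effort.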

Linear Algebra and Calculus:

It is a common misconception that you need advanced linear algebra and calculus to get started with Deep Learning. Not so! School-level algebra is enough to begin with. Some of the key terms are a) Scalars and Vectors and b) Matrices and Matrix operations.

Scalars and Vectors: A scalar has only magnitude, while a vector has both magnitude and direction. Two vectors can be multiplied in two ways: the dot product, which gives a scalar, and the cross product, which gives another vector.

Matrices and Matrix operations: A matrix is an array of numbers arranged in rows and columns. Two matrices of the same shape are added or subtracted element by element, just like ordinary numbers. Multiplication and division work a bit differently from the usual ones.

Scalar Multiplication: When a matrix is multiplied by a single scalar value, every element of the matrix is multiplied by that scalar.

Matrix Multiplication: Each entry of the result is the dot product of a row of the first matrix with a column of the second. The number of columns in the first matrix must equal the number of rows in the second, and the result can have different dimensions from both: an m × n matrix times an n × p matrix gives an m × p matrix.

Transpose of the matrix: The transpose is obtained by swapping the rows and columns of the matrix.

The inverse of the matrix: Just as a number multiplied by its reciprocal gives 1, a matrix multiplied by its inverse gives the identity matrix. The inverse takes the place of division, and only square matrices with a non-zero determinant have one.

Calculus: Deep learning involves large amounts of quantitative data and complex ML models, so working with both can be expensive in terms of time and resources. For this reason, it is vital to optimize the machine learning model so that it predicts accurate results without using too much time or other resources. Calculus, in particular derivatives, provides the tools for this optimization.
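The two vector products can be sketched in a few lines of plain Python. The function names `dot` and `cross` and the example vectors are illustrative choices, and the cross product is written here for 3-D vectors only.

```python
def dot(a, b):
    """Dot product: sum of element-wise products; the result is a scalar."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    """Cross product of two 3-D vectors; the result is another 3-D vector."""
    if len(a) != 3 or len(b) != 3:
        raise ValueError("this sketch handles 3-D vectors only")
    ax, ay, az = a
    bx, by, bz = b
    return [ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx]


u = [1, 0, 0]  # unit vector along x
v = [0, 1, 0]  # unit vector along y

print(dot(u, v))    # 0, because the vectors are perpendicular
print(cross(u, v))  # [0, 0, 1], the unit vector along z
```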
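The matrix operations described above can be tried out with NumPy, a widely used Python library for numerical work. This is a minimal sketch: the matrices `A` and `B` are made-up values, and NumPy itself is an assumption here since the article does not name a specific library.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 3.0]])        # 2 x 2 matrix
B = np.array([[1.0, 0.0, 2.0],
              [0.0, 1.0, 1.0]])   # 2 x 3 matrix

added = A + A              # element-wise addition of same-shaped matrices
scaled = 3 * A             # scalar multiplication: every element times 3
product = A @ B            # matrix multiplication: (2 x 2) @ (2 x 3) -> (2 x 3)
transposed = B.T           # transpose: rows and columns swapped -> (3 x 2)
A_inv = np.linalg.inv(A)   # inverse exists because det(A) = 5 is non-zero
identity_check = A @ A_inv # approximately the 2 x 2 identity matrix

print(product.shape, transposed.shape)
print(np.round(identity_check, 6))
```

Note that `A @ B` works while `B @ A` would raise an error, because the inner dimensions (3 and 2) would not match.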
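One common way calculus is used to optimize a model is gradient descent, which repeatedly nudges a parameter in the direction that reduces the error. The article does not name a specific method, so treat the following as an illustrative sketch: the loss function, learning rate, and step count are all assumed values.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient to reduce the loss."""
    w = start
    for _ in range(steps):
        w -= learning_rate * grad(w)
    return w


def loss(w):
    """Toy loss with its minimum at w = 3."""
    return (w - 3) ** 2


def loss_grad(w):
    """Derivative of the toy loss with respect to w."""
    return 2 * (w - 3)


w_best = gradient_descent(loss_grad, start=0.0)
print(round(w_best, 4), round(loss(w_best), 8))
```

The derivative tells the loop which way is downhill, so the parameter settles close to 3 without trying every possible value.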

Probability and Statistics for Deep Learning

Just like Linear Algebra, Probability and Statistics is a world of its own within mathematics. Beginners may find it intimidating, and even seasoned data scientists sometimes find it challenging to recall advanced statistical concepts.

Nevertheless, we cannot deny that Statistics is the backbone of both Machine Learning and Deep Learning. Concepts from Probability and Statistics are essential in the industry, because being able to interpret a deep learning model is of foremost importance.

Let’s understand probability through an example. If one student is picked at random out of a thousand students, what is the probability that the student has passed the test? This is where the concept of probability helps. If the probability is 0.6, then there is a 60% chance that the student has passed the test (assuming the passing mark is 40).
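The same idea can be worked out in code by counting. The sketch below uses a made-up list of ten marks instead of a thousand students, with the passing mark of 40 taken from the example above.

```python
marks = [12, 35, 40, 41, 55, 63, 28, 90, 39, 72]  # hypothetical marks
PASS_MARK = 40

# Probability of passing = students who passed / total students
passed = sum(1 for m in marks if m >= PASS_MARK)
p_pass = passed / len(marks)

print(passed, p_pass)  # 6 students passed, so the probability is 0.6
```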

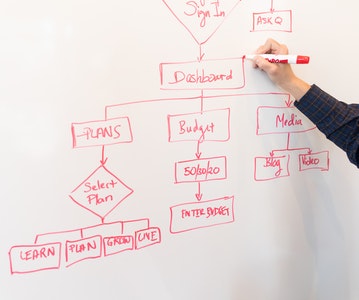

Key Machine Learning Concepts

One good thing about Machine Learning algorithms is that you don’t need to master every complex detail before you start with deep learning. Once you have the basics, you can study them in depth. Below are some basic yet important concepts that will help you build a foundation for deep learning.

Supervised learning: There are two kinds of variables. One is the target variable that we want to predict; the others are the input variables, the independent features that contribute to the target. The algorithm learns a relationship, often expressed as an equation, between the input variables and the target variable. Some examples are kNN, SVM, and Linear Regression.

Unsupervised learning: In this type of learning, there is no target variable. It is mainly used to find structure in the data, for example by grouping similar data points into clusters that can be examined later. Some examples of unsupervised learning are the Apriori algorithm and k-means clustering.
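As a small illustration of supervised learning, the sketch below fits a straight line (simple linear regression by least squares) to made-up data. The function name `fit_line` and the hours/scores values are hypothetical, with hours studied as the input variable and the score as the target.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


hours = [1, 2, 3, 4, 5]         # input variable
scores = [52, 54, 56, 58, 60]   # target variable (here exactly 2 * hours + 50)

slope, intercept = fit_line(hours, scores)
predicted = slope * 6 + intercept  # prediction for an unseen input

print(slope, intercept, predicted)  # 2.0 50.0 62.0
```

The learned equation is the relationship between input and target; real data is noisy, so the fit is rarely this exact.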
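For unsupervised learning, here is a bare-bones sketch of k-means clustering on one-dimensional data. The function name `kmeans_1d`, the data points, and the starting centroids are all illustrative assumptions. Note that no target variable appears anywhere.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Alternate between assigning points and updating centroids."""
    centroids = list(centroids)
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters


points = [1.0, 1.5, 2.0, 9.0, 9.5, 10.0]
centroids, clusters = kmeans_1d(points, centroids=[0.0, 5.0])

print(centroids)  # [1.5, 9.5]
print(clusters)   # [[1.0, 1.5, 2.0], [9.0, 9.5, 10.0]]
```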

Evaluation Metrics

Building an effective model is important in deep learning, but you also need to evaluate it regularly as you make improvements in order to reach the best model.

But how do you judge a deep learning model? Evaluation metrics come to your rescue. Different tasks, such as regression and classification, use different evaluation metrics.

Evaluation metrics for Classification:

- Confusion Matrix

- Accuracy

- Precision and Recall

- F1-score

- AUC-ROC

- Log Loss

Whether you work in research or in industry, evaluation metrics are highly important for your deep learning model, because the model is judged by them.
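Several of the classification metrics listed above follow directly from the four counts in a confusion matrix. The sketch below computes them by hand for a binary problem; the function names and the label lists are made up for illustration.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, fn, tn) for a binary classification task."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1   # correctly predicted positive
        elif p == positive:
            fp += 1   # predicted positive, actually negative
        elif t == positive:
            fn += 1   # predicted negative, actually positive
        else:
            tn += 1   # correctly predicted negative
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


y_true = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical actual labels
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]  # hypothetical model predictions

metrics = classification_metrics(y_true, y_pred)
print(confusion_counts(y_true, y_pred))  # (3, 1, 1, 3)
print(metrics)
```

In this toy case all four metrics come out to 0.75; on imbalanced data they usually differ, which is why accuracy alone can mislead.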

Validation Techniques

As discussed earlier, a deep learning model learns from the data it is given, but you still need to check whether it is actually improving. The true performance of a model can only be verified by feeding it new data.

However, improving the model can be time-consuming and costly. This is where validation steps in. The data is divided into three parts: training, validation, and test sets. The model is first trained on the training set, tuned using the validation set, and finally evaluated on the unseen test set. Common validation techniques include k-fold cross-validation and leave-one-out cross-validation (LOOCV).
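To show how k-fold cross-validation splits the data, here is a minimal sketch that only produces the index splits; training a model on each split is left out. The function name `k_fold_indices` is hypothetical, and the data is not shuffled, which you would normally do first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


folds = list(k_fold_indices(n_samples=10, k=5))
for train, validation in folds:
    print("train:", train, "validation:", validation)

# Setting k equal to the number of samples gives leave-one-out (LOOCV)
loocv = list(k_fold_indices(n_samples=4, k=4))
```

Every sample lands in the validation set exactly once, so the average score across folds uses all the data without ever validating on points the model was trained on.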

Conclusion

In a nutshell, learning deep learning becomes a lot easier if we move step by step. Deep learning is essentially very large neural networks trained on much more data, which requires more powerful computers. We looked at the five essentials that support the deep learning process. PyTorch and TensorFlow are two popular deep learning frameworks you can explore. Both are used from Python, so working with them will strengthen your grasp of Python as well. Once you are done with these, more advanced topics such as backpropagation and hyperparameter tuning will be the next steps on your way to becoming well-versed in deep learning.
