This article is about Artificial Intelligence

Understanding the State of Natural Language Processing (NLP)

By NIIT Editorial

Published on 11/09/2021

6 minutes

Natural language processing (NLP) is a subfield of linguistics, artificial intelligence and computer science concerned with the interactions between computers and human language. In particular, it deals with programming computers to process and analyse large amounts of natural language data. The result is a computer that can understand the contents of documents, including the contextual nuances of the language in them. The technology can then accurately extract the required information from the documents, and categorize and organize the documents themselves.

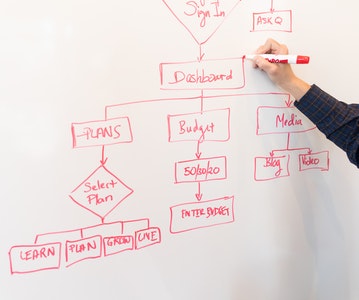

A spoken interaction between a human and a computer proceeds as follows:

- A human speaks to the computer

- The computer records the audio

- The audio is converted into text

- The text data is processed

- The processed data is converted back to audio

- The computer responds to the human by playing the audio

Simply put, natural language processing is the process by which a computer reads and understands natural human language in a meaningful way.

History of Natural Language Processing

In 1950, the renowned mathematician Alan Turing proposed a test of machine intelligence. To pass it, a machine had to hold a conversation that could not be told apart from a human’s. Rather than asking whether the machine could think, Turing focused on whether it could follow a conversation and respond appropriately, well enough that its behaviour went beyond mere automation. The French philosopher Denis Diderot, who lived some 200 years before Turing, said, “If they find a parrot who could answer everything, I would claim it to be an intelligent being without hesitation.”

Turing’s idea behind this test shows that natural language processing has long been a frontier for artificial intelligence. However, these historical ideas are very different from the modern approach to processing and analysing language data.

Uses of Natural Language Processing

At its most basic level, NLP does not require artificial intelligence or machine learning. The auto-correct function built into modern phones can run on simple NLP principles alone: it is a rule-based way of analysing natural language by comparing written text against a dictionary database. Another example is automatic filtering, a useful feature for media vendors and social media creators, who can curb hate speech and swearing by swapping flagged words for neutral ones.
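The rule-based approach described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular phone or platform implements it: the word list, the `FLAGGED` mapping and the 0.7 similarity cutoff are all assumptions chosen for the example, and the standard-library `difflib` module stands in for a real spell-checking engine.

```python
import difflib

# Tiny illustrative dictionary (assumption); a real checker would load a full word list.
DICTIONARY = ["language", "natural", "processing", "computer", "human"]

# Hypothetical mapping of flagged words to neutral replacements.
FLAGGED = {"stupid": "silly"}


def suggest(word, dictionary=DICTIONARY):
    """Return the word if it is known, else the closest dictionary entry, else the word unchanged."""
    lowered = word.lower()
    if lowered in dictionary:
        return lowered
    # get_close_matches ranks candidates by string similarity; 0.7 is an arbitrary cutoff.
    matches = difflib.get_close_matches(lowered, dictionary, n=1, cutoff=0.7)
    return matches[0] if matches else lowered


def autocorrect(text):
    """Correct each whitespace-separated word independently."""
    return " ".join(suggest(word) for word in text.split())


def filter_text(text):
    """Swap flagged words for their neutral replacements, leaving other words as they are."""
    return " ".join(FLAGGED.get(word.lower(), word) for word in text.split())


if __name__ == "__main__":
    print(autocorrect("natural langauge procesing"))
    print(filter_text("that is stupid"))
```

Neither function uses any learned model: both are plain lookups against fixed lists, which is why this level of NLP needs no machine learning.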

Furthermore, NLP is used in applications such as autocorrect and autocomplete, Google Translate, grammar checkers in tools like MS Word or Grammarly, Interactive Voice Response (IVR) apps in call centres, personal assistants such as Ok Google, Siri and Cortana, and chatbots.

How does Natural Language Processing work?

Natural language is very difficult to decipher because it is high-level and abstract. Sarcasm is one example of what makes it hard for a computer. Understanding human language requires understanding the words people use, the concepts behind them, and the way these are connected to deliver an intended message.

Natural human language is unstructured data. Algorithms convert this unstructured data into a form that the computer can work with. Sometimes the computer fails to interpret it correctly, which leads to absurd results.
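One simple way to turn unstructured text into something a computer can work with is a bag-of-words count vector. The sketch below is only one of many possible representations and is not taken from the article; the function names and the tokenization rule (lowercase letters and apostrophes) are assumptions made for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and keep runs of letters (and apostrophes) as tokens."""
    return re.findall(r"[a-z']+", text.lower())


def build_vocabulary(documents):
    """Map every distinct token in the documents to a fixed column index."""
    vocab = sorted({token for doc in documents for token in tokenize(doc)})
    return {word: index for index, word in enumerate(vocab)}


def vectorize(text, vocabulary):
    """Count how often each vocabulary word occurs; words outside the vocabulary are ignored."""
    counts = Counter(tokenize(text))
    return [counts.get(word, 0) for word in vocabulary]


if __name__ == "__main__":
    docs = ["The cat sat.", "The dog sat on the cat."]
    vocab = build_vocabulary(docs)
    for doc in docs:
        print(doc, vectorize(doc, vocab))
```

The resulting vectors discard word order entirely, which hints at why a computer can misread a sentence: "dog bites man" and "man bites dog" produce identical counts.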

Techniques used in Natural Language Processing

The techniques used in NLP fall into two main groups:

- Syntax

- Semantics

Syntax

Syntax refers to the arrangement of words that makes a sentence grammatically correct. Algorithms apply the rules of grammar to sentences and derive meaning from them.

Some of the syntax techniques that can be used are listed below:

- Lemmatization: Reducing the various inflected forms of a word to a single base form for easier analysis.

- Morphological segmentation: Dividing words into individual units known as morphemes.

- Word segmentation: Dividing a large piece of continuous text into small, distinct units.

- Part-of-speech tagging: Identifying the part of speech of every word.

- Parsing: Performing grammatical analysis of a given sentence.

- Sentence breaking: Placing sentence boundaries in a large piece of text.

- Stemming: Cutting inflected words down to their root form.
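Three of the techniques listed above (sentence breaking, word segmentation and stemming) can be sketched with nothing more than regular expressions. This is a deliberately naive illustration: the splitting rules and the `SUFFIXES` list are assumptions made for the example, and production systems use far more careful rules or trained models. Lemmatization is not shown because it needs a dictionary of word forms rather than simple suffix stripping.

```python
import re

# Suffixes the naive stemmer strips, checked in this order (illustrative assumption).
SUFFIXES = ("ing", "ed", "es", "s")


def split_sentences(text):
    """Sentence breaking: split after '.', '!' or '?' when followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def split_words(sentence):
    """Word segmentation: keep runs of ASCII letters as words."""
    return re.findall(r"[A-Za-z]+", sentence)


def naive_stem(word):
    """Stemming: strip one known suffix if at least three letters remain."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


if __name__ == "__main__":
    text = "Dogs barked. Cats are sleeping!"
    for sentence in split_sentences(text):
        words = split_words(sentence)
        print(sentence, [naive_stem(w) for w in words])
```

A stemmer this crude makes mistakes a lemmatizer would avoid, for example it leaves "ran" untouched instead of mapping it to "run".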

Semantics

Semantics refers to the meaning intended by a sentence or statement. It is one of the more difficult areas of NLP.

Some techniques that are used in semantic analysis:

- Named entity recognition (NER): Identifying the parts of a text that belong to preset groups, such as names of people, organizations or places, so that they can be categorized.

- Word sense disambiguation: Determining the meaning of a word based on its context.

- Natural language generation: Using databases to derive the intended meaning and convert it into human language.
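The idea of "preset groups" in named entity recognition can be illustrated with a lookup table, often called a gazetteer. The sketch below is a simplification made for this example: the `GAZETTEER` contents are hand-picked names that appear in this article, and real NER systems usually rely on statistical or neural models rather than exact matching.

```python
import re

# Hand-written lookup table of known entities per category (illustrative assumption).
GAZETTEER = {
    "PERSON": {"Alan Turing", "Denis Diderot"},
    "ORGANIZATION": {"Gartner", "Royal Bank of Scotland"},
    "LOCATION": {"Britain", "Rome"},
}


def find_entities(text):
    """Return (entity, label) pairs for every gazetteer name found, in order of appearance."""
    found = []
    for label, names in GAZETTEER.items():
        for name in names:
            # \b keeps us from matching inside longer words.
            for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
                found.append((match.start(), name, label))
    return [(name, label) for _, name, label in sorted(found)]


if __name__ == "__main__":
    print(find_entities("Alan Turing was born in Britain."))
```

Exact matching cannot recognise a name it has never seen, which is one reason semantic analysis is harder than the syntax techniques above.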

NLP business applications

Despite some drawbacks, NLP is the talk of the town. It is growing swiftly and attracting businesses. A good benchmark of NLP’s current state is automated translation (AT). It still tends to misinterpret words, which often produces a comic effect for users. Knowingly or unknowingly, most people, especially millennials and Gen Z, use NLP every day. Mobile apps that can translate the text in a video in real time are creating a buzz. In one incident in Britain, a court had to rely on Google Translate for a Mandarin speaker, Xiu Ping Yang.

NLP also helps to improve the customer experience, and chatbots are a popular example. According to a survey by Walker, around 88% of buyers are ready to pay more for an enhanced customer experience. This insight prompted the pizza giant Domino’s to offer a chatbot to help its customers, who can now easily order pizza via Google Home or Facebook Messenger. Similarly, the Royal Bank of Scotland states that after introducing chatbots, the usage of mobile applications has decreased by 20%. Not all chatbots need much artificial intelligence, however. Some are programmed with predefined standard answers to questions such as when a store opens or how to order a product.
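A chatbot with predefined answers can be as simple as a keyword lookup. The following is a minimal sketch under stated assumptions: the keywords, the answers (including the opening hours) and the fallback message are all placeholders invented for the example and do not describe any real company's bot.

```python
import re

# Each rule pairs trigger keywords with a canned answer (all placeholder content).
RULES = [
    (("open", "hours", "closing"), "We are open from 9 am to 9 pm every day."),
    (("order", "buy"), "To order, add items to your basket and choose checkout."),
]

FALLBACK = "Sorry, I did not understand that. A human agent will get back to you."


def reply(message):
    """Return the first canned answer whose keywords appear in the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for keywords, answer in RULES:
        if words & set(keywords):
            return answer
    return FALLBACK


if __name__ == "__main__":
    for question in ["When do you open?", "How can I order a pizza?", "Tell me a joke"]:
        print(question, "->", reply(question))
```

Such a bot involves no learning at all; it only works for the questions its authors anticipated and hands everything else to the fallback.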

Reputation monitoring

Sentiment analysis and online reputation monitoring are tasks that require the ability to identify particular words in a sentence while also understanding the sentence as a whole. While designing a solution for the United Nations Office of Information and Communications Technology, deepsense.ai faced some obstacles.

Fake news has become a major, recurrent concern. Both governments and companies need to stay up to date with the news and stories published online. Thanks to NLP, it is now possible to analyse the continuous flow of language-based social media data and help determine whether a piece of news is fake or genuine.

The legal intricacies

A leading example of NLP in practice comes from the consultancy giants PwC, Deloitte and EY. They use NLP technology to check large numbers of contracts for compliance with lease accounting standards. All of this information, including emails, text-based data and conversations, is unstructured.

Gartner, a research and advisory giant, has estimated that 80% of data is unstructured. This is where NLP comes to the rescue: it is an effective and powerful tool for analysing such data and gathering meaningful insights. According to research by the University of Rome, adopting NLP techniques resulted in 12% less effort spent on classifying equivalent requirements.

The NLP market is expected to reach $22.3 billion by 2025. The chief driver of this growth is the design of new, scalable solutions to real-life problems for customers and businesses alike.

Future Potential of NLP – word embeddings and grammatical gender

fastText and word2vec have made words understandable to computer systems. In these models, each word in the training text (in practice, large amounts of text from the internet) is represented by a multidimensional vector that captures its meaning and connotations. This is commonly called word embedding. For example, the vectors for kitty, cat and kitten are similar to each other but dissimilar to those for car, telephone or computer. This makes it easier to build better models.
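How "similar" two word vectors are is commonly measured with cosine similarity. The sketch below uses made-up three-dimensional vectors purely for illustration; they are not real fastText or word2vec output, which typically has hundreds of dimensions learned from large text collections.

```python
import math

# Made-up 3-dimensional vectors for illustration only (not real embeddings).
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.9, 0.05],
    "car": [0.1, 0.2, 0.95],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1.0 means similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    cat, kitten, car = (EMBEDDINGS[w] for w in ("cat", "kitten", "car"))
    print("cat vs kitten:", round(cosine_similarity(cat, kitten), 3))
    print("cat vs car:   ", round(cosine_similarity(cat, car), 3))
```

With these toy values, "cat" and "kitten" score close to 1 while "cat" and "car" score much lower, mirroring the relationship between related and unrelated words.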

The main difference between image recognition and NLP is that an image recognition model can be given additional images, whereas an NLP model already has its words described in advance by the embeddings, so no further explanation of them is required.

However, performing relevant tasks with the given data still depends entirely on the model and the skills of the data scientists. In that sense, word embeddings can be compared to the first layers of a pre-trained image recognition network.

Because the data it analyses is extremely contextualized, natural language processing (NLP) can sometimes face challenges. Since language is a blend of culture, history and information, the ability to understand it is imperative.
