A Detailed Guide On Auto Scaling In Cloud Computing

By NIIT Editorial

Published on 19/11/2021

7 minutes

Allocating computational resources dynamically is easier now than ever, thanks to a cloud computing technique known as auto-scaling, or automatic scaling. With auto-scaling, the number of active servers in a server farm or pool changes automatically as the load on the application rises and falls.

Load balancing and auto-scaling are closely related: an application typically scales by adding or removing servers behind a load balancer, which spreads traffic across them. The serving capacity of the load balancer is one of several metrics (alongside cloud monitoring metrics and CPU utilization) that can determine an auto-scaling policy. Many online DevOps and cloud computing courses cover this in more depth.
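To make the role of a metric such as CPU utilization concrete, here is a minimal sketch of a target-based sizing rule. It is an illustration only, not any particular provider's algorithm: the function name `desired_instances` and the proportional formula are assumptions chosen for this example.

```python
import math


def desired_instances(current_instances: int, current_cpu: float, target_cpu: float) -> int:
    """Estimate how many instances would bring average CPU back to the target.

    Assumes load is spread evenly across instances, so average CPU scales
    inversely with the instance count. This is a simplification.
    """
    if current_instances < 1:
        raise ValueError("current_instances must be at least 1")
    if target_cpu <= 0:
        raise ValueError("target_cpu must be positive")
    # Proportional rule: more instances if CPU is above target, fewer if below.
    needed = math.ceil(current_instances * current_cpu / target_cpu)
    return max(1, needed)


# Four instances running hot against a 60% target, then running cool.
print(desired_instances(4, 90.0, 60.0))
print(desired_instances(4, 30.0, 60.0))
```

Under this rule, the pool grows when the observed metric is above the target and shrinks when it is below, which is the basic feedback loop a metric-driven policy relies on.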

Explaining Auto Scaling in Cloud Computing

Cloud autoscaling allows organizations to scale cloud resources, such as virtual machines and server capacity, up or down automatically based on factors such as traffic or utilization. Autoscaling tools are available from cloud computing providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

Additionally, autoscaling provides lower-cost, reliable performance by seamlessly adding and removing instances as demand fluctuates. In this way, autoscaling maintains consistency despite the dynamic and sometimes unpredictable nature of app demand.

The overall benefit of autoscaling is that it reacts to traffic spikes in real time without manual intervention: new resources and instances are added, and the number of active servers changes, automatically.

Autoscaling covers the full lifecycle of each server: configuring it, monitoring it, and decommissioning it. Online training courses for DevOps or cloud computing provide detailed insight into this. Similarly, an autoscaling database starts up, shuts down, and scales up or down automatically according to the application’s needs.

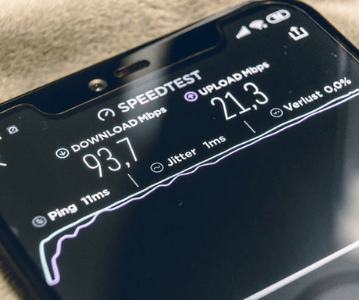

Not every traffic spike is legitimate. For instance, a spike resulting from a distributed denial-of-service (DDoS) attack can be difficult to recognize. If you monitor the auto-scaling metrics of the system closely and keep the scaling policies well tuned, the system can respond much more quickly.

Autoscaling: How Does It Work?

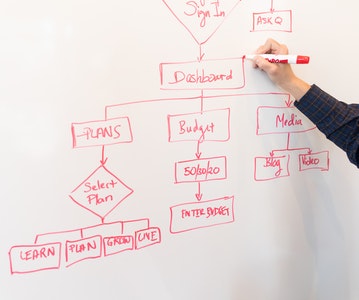

Autoscaling works in a variety of ways, depending on the platform and resources a business uses. Compute, memory, and network resources are allocated by creating a virtual instance of a type with predefined performance attributes and a specified capacity. Across all autoscaling approaches, several standard features make automatic resource scaling possible.

One such feature is the launch configuration, also called the baseline deployment. A launch configuration is typically set up with the options a user expects a workload to need for day-to-day operations. CPU, memory, and network requirements, for example, are generally chosen based on that expectation.

An autoscaling policy, implemented through an autoscaling technology or service, makes it possible to add extra resources when traffic spikes put existing resources under stress. The policy lets a user define capacity constraints. A network autoscaling policy, for example, can start from a launch configuration with a baseline amount of capacity and allow the service to scale up automatically, in response to demand, to a specified maximum.

Autoscaling technologies and services can deliver extra resources in different ways. In some cases, a service automatically gives more capacity to an existing resource. In others, the autoscaling policy launches additional resources and adds them to the pool of virtual instances the user has already deployed to handle traffic spikes.
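The relationship between a launch configuration and a policy's capacity constraints can be sketched in a few lines of Python. The class names `LaunchConfiguration` and `ScalingPolicy`, their fields, and the instance type label below are hypothetical and do not correspond to any provider's API; they only illustrate a baseline plus a minimum and maximum.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LaunchConfiguration:
    """Baseline deployment: what the workload is expected to need day to day."""
    instance_type: str       # hypothetical label, not a real provider SKU
    vcpus: int
    memory_gib: int
    baseline_instances: int


@dataclass(frozen=True)
class ScalingPolicy:
    """Capacity constraints that bound any automatic scaling decision."""
    min_instances: int
    max_instances: int

    def clamp(self, requested: int) -> int:
        # Never go below the minimum or above the specified maximum.
        return max(self.min_instances, min(self.max_instances, requested))


def capacity_under_policy(config: LaunchConfiguration, policy: ScalingPolicy, extra: int) -> int:
    """Baseline capacity plus the extra instances demand calls for, within limits."""
    return policy.clamp(config.baseline_instances + extra)


config = LaunchConfiguration("example.medium", vcpus=2, memory_gib=4, baseline_instances=3)
policy = ScalingPolicy(min_instances=2, max_instances=10)
print(capacity_under_policy(config, policy, extra=4))    # within the limits
print(capacity_under_policy(config, policy, extra=20))   # capped at the maximum
```

Keeping the constraints separate from the baseline means the same policy can be reused across workloads with different launch configurations.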

The Purpose Of Autoscaling

Cloud services and application workloads can be autoscaled based on different conditions to provide optimum performance and availability. Without autoscaling, resources are fixed by the configuration defined for a particular deployment.

An organization that needs to process a large analytics workload might require more memory and compute resources than initially expected. When an autoscaling policy is in place, compute and memory can scale automatically to process the data. A cloud provider can also use autoscaling to ensure service availability.

In the event of a traffic spike, such as Black Friday shopping, there can be a substantial change in the usual pattern of activity on a service site. An organization might set up an initial set of instances that it expects to be enough for a service’s usual traffic. When the service experiences a traffic spike, autoscaling can provide the resources needed to keep it running and continue serving customers efficiently.

The Types Of Autoscaling

There are three types of autoscaling. Online cloud computing courses cover each of them in more detail:

Reactive: A reactive autoscaling approach scales resources up and down in response to traffic spikes. It is closely linked to real-time monitoring of resource utilization. There is often a cooldown period involved, during which resources stay elevated even after traffic drops so that any further spikes can be handled.

Predictive: A predictive autoscaling approach uses machine learning and artificial intelligence to analyze traffic loads and predict when more or fewer resources will be required.

Scheduled: A scheduled approach lets users specify when resources will be added. Before a significant event, or ahead of the peak hours in a day, you can prepare for the spike in demand by pre-provisioning resources rather than waiting for a scale-up to take place.
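The three approaches can be contrasted with a small Python sketch. Everything here is illustrative: the thresholds, the five-minute cooldown, and the names `ReactiveScaler`, `predict_instances`, and `scheduled_instances` are assumptions for this example. In particular, the predictive function uses a plain moving average as a stand-in for the machine learning models a real predictive autoscaler would use.

```python
import math
from datetime import datetime, time, timedelta
from statistics import mean


class ReactiveScaler:
    """Reactive: act on live utilization, but hold steady during a cooldown."""

    def __init__(self, scale_out_at=75.0, scale_in_at=30.0, cooldown=timedelta(minutes=5)):
        self.scale_out_at = scale_out_at
        self.scale_in_at = scale_in_at
        self.cooldown = cooldown
        self.last_change = None  # time of the most recent scaling action

    def decide(self, instances: int, cpu_percent: float, now: datetime) -> int:
        if self.last_change is not None and now - self.last_change < self.cooldown:
            return instances  # still cooling down: keep current capacity
        if cpu_percent > self.scale_out_at:
            self.last_change = now
            return instances + 1
        if cpu_percent < self.scale_in_at and instances > 1:
            self.last_change = now
            return instances - 1
        return instances


def predict_instances(recent_requests, capacity_per_instance: int, window: int = 3) -> int:
    """Predictive: size the pool for a forecast load (moving average stand-in)."""
    forecast = mean(recent_requests[-window:])
    return max(1, math.ceil(forecast / capacity_per_instance))


def scheduled_instances(now: datetime, schedule, default: int) -> int:
    """Scheduled: use a pre-provisioned count inside a known time window."""
    for start, end, count in schedule:
        if start <= now.time() < end:
            return count
    return default


scaler = ReactiveScaler()
t0 = datetime(2021, 11, 26, 9, 0)
print(scaler.decide(2, 90.0, t0))                          # scales out
print(scaler.decide(3, 10.0, t0 + timedelta(minutes=2)))   # held by cooldown
print(predict_instances([100, 200, 300, 400], 100))
print(scheduled_instances(t0, [(time(9), time(18), 10)], default=2))
```

The cooldown check comes first in `decide` so that a brief dip in traffic right after a scale-out does not immediately remove the capacity that was just added.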

Wrapping It Up!

By configuring automatic scaling with a cloud provider, you can set up your application's scalable resources to scale automatically within minutes. With the AWS Auto Scaling console, you can configure auto-scaling for multiple AWS services through a single user interface. Automatic scaling can be configured for individual resources or for entire applications.
